Beyond Your Phone: What Makes a Smart Glasses App Smart?

Smart Glasses are not just a “smartwatch for your face,” as they were once considered. A watch is designed to stay out of the way: something you glance at from time to time. Glasses are always present and always on. As application platforms, the two couldn't be more different, and with recent breakthroughs in design and AI, Smart Glasses are poised to become a major consumer success. Meta’s recent Ray-Ban sales reports suggest we’re just at the beginning of the consumer demand curve for this technology.

Smart Glasses have unique capabilities that set them apart from mobile or even current XR headset platforms. Truly great Smart Glasses apps use them to create exclusive experiences that can only exist on these devices. Here’s what really sets Smart Glasses apps apart from what you’re typically used to:

Contextual Awareness

A true Smart Glasses app, whether it runs on full AR spectacles or on simpler AI-assisted smart glasses, has the unique ability to pinpoint the user’s position in the real world and analyze what the user is seeing, either through live streaming video or by ingesting snapshots from the device’s cameras. Most current glasses have some form of GPS support. Fusing what the cameras see of the local environment with the user’s GPS coordinates opens up fascinating possibilities.

Sure, smartphones have cameras and GPS as well, but the difference is that on glasses these features are ‘always on’. With vision apps on mobile, you have to launch the app and awkwardly hold your phone out so it can see your surroundings. With glasses, this is effortless. Once launched, the app sees what you see, with no extra effort required.

Artificial Intelligence // Voice

There aren’t many input mechanisms on Smart Glasses. Even with full hand tracking on Snap’s Spectacles, it’s reasonable to assume the user’s hands may be busy with other tasks while the app is running. Or the app itself may analyze what the user’s hands are doing, such as swinging a golf club or performing sign language. On top of that, the contextual awareness described above gives LLMs a lot of information to chew on. This makes a conversational interface with an LLM a critical element of the Smart Glasses experience.

AI Chef: An example of an AI-powered voice interface for AR Smart Glasses

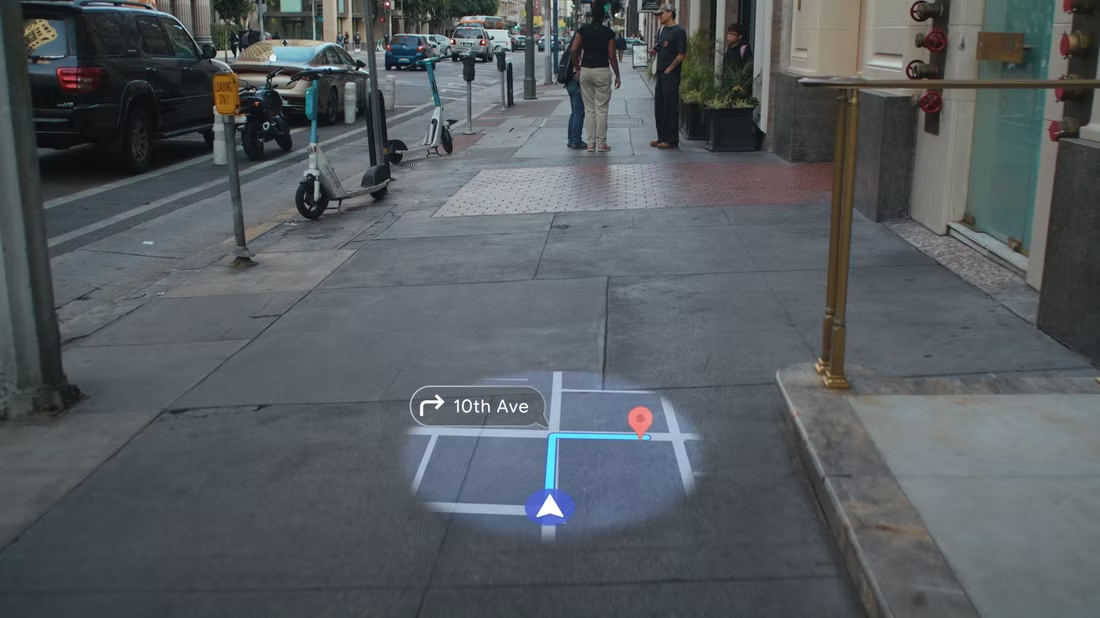

Imagine navigating a city with an AR overlay as you walk back to your apartment, arms full of groceries. Or imagine a Smart Glasses app that uses AI and visual guidance to help you properly trim a fruit tree in your backyard: while you hold the trimmer, the glasses tell you what to cut and where. You don’t actually have to imagine that far into the future, since I’ve done the latter using my Meta Ray-Bans and a live AI session.

With an audio interface driven by an LLM, a Smart Glasses app can provide deep functionality without any physical interaction or even a visible GUI. Given these UI limitations, AI agents must be able to perform tasks with minimal input from the user. Voice interfaces connected to cloud-based AI agents also let Smart Glasses apps offload much of their work to the cloud, which eases the hardware’s power constraints.

Additive Display // Display Management

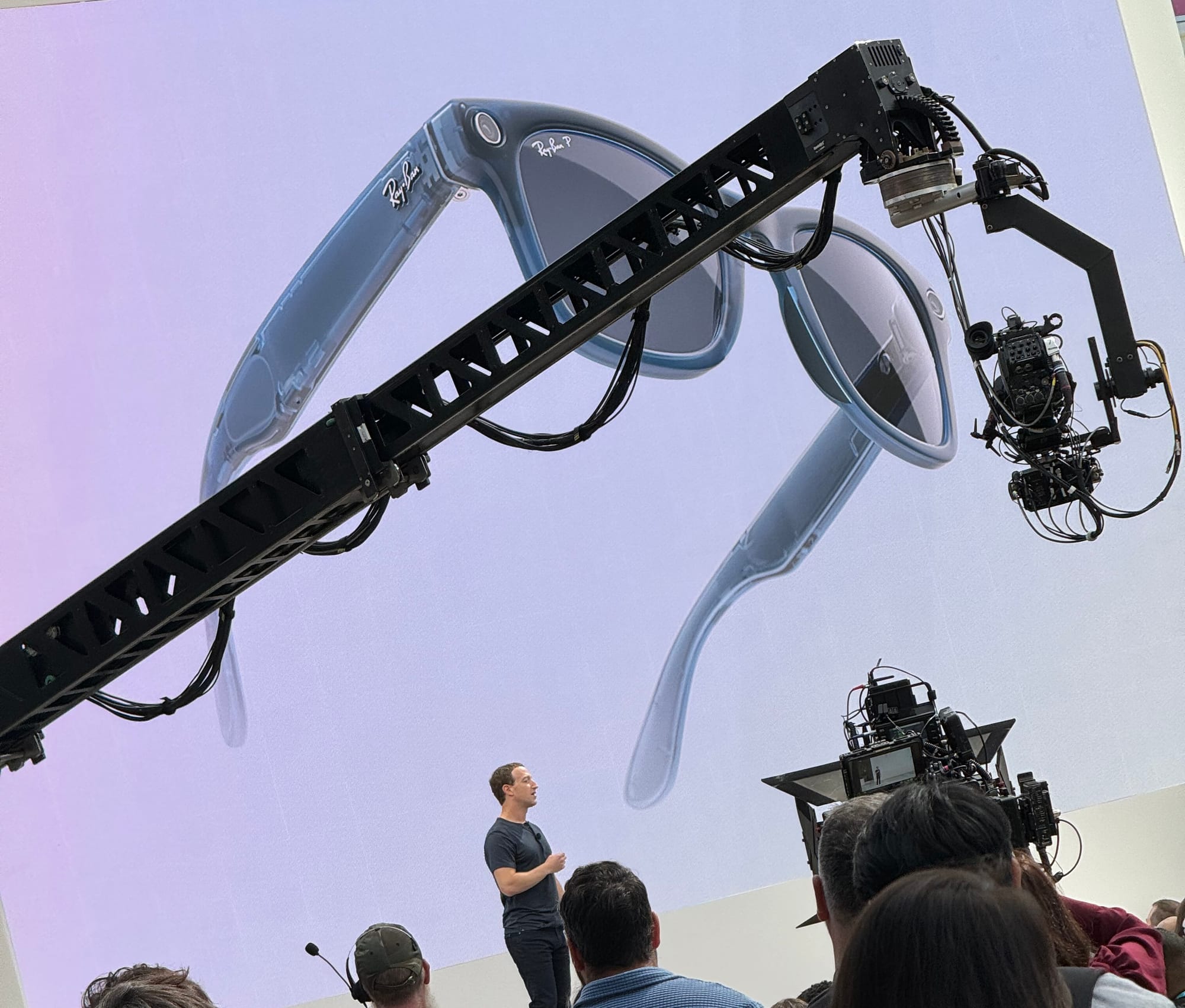

When people think of smart glasses, they often picture a sci-fi, Iron Man-style AR experience. Meta’s Orion clearly aims for this goal in a surprisingly small form factor. But this kind of fully immersive AR display isn’t strictly necessary for a successful consumer device. Even Meta describes Orion as “the future,” while its plans for the near term are more modest.

This is good news, because power and thermal restrictions make an always-on, immersive waveguide display difficult to pull off. Running a Spectacles Lens continuously might let you squeak out 45 minutes of runtime before the battery dies, and thermal limits may throttle performance well before then. Luckily, a lot can be done with just a simple waveguide HUD, as seen in Google’s Android XR glasses demos earlier this year.

To gain mass acceptance, a Smart Glasses app also has to work with the display treated as optional. Some of its features should be useful through audio alone, with no visual feedback. This lets the app run in a power-efficient mode and use the display only at critical moments.

In fact, the previous generation of AR Snap Spectacles, Spectacles 4, had a low-power, no-display mode for Lenses that I’d like to see Snap bring back. Power management features are critical to making Smart Glasses a device you can use all day.

What’s Next?

Next up, we’ll dig into the details of some award-winning Smart Glasses apps on Spectacles and other platforms, and speak to the developers behind them (including myself, I suppose!).

Subscribe to Glassist so you don’t miss out!

Oh, and what applications would you like to see on current and future Smart Glasses platforms? I’m always looking for inspiration for my next Snap Spectacles projects.